Borges points out that Zeno's parable of Achilles and the tortoise, which neatly encapsulates his (Zeno's) paradox, is Kafkaesque. That is, without Kafka, there is no Zeno. Even the title of this post, a direct quote from Kafka, is Kafkaesque. Does he mean that there is an infinite amount of "hope" in the world, but we can't have it? Or does he mean that there's plenty of hope in the world, but that we are hopelessly doomed as a species? Or both? Or neither?

Kafka's work has also been referred to as the "poetics of non-arrival." Many of his characters fail to reach a destination (or wake up as giant cockroaches and get starved by their families--same diff). In class this week we read Before the Law, whose very title launches a series of questions. "Before" in what sense? Temporally? Spatially? Both? Neither? And which "law" does he mean? Religious? Secular? Moral? Scientific? All? None? You get the point.

In case you haven't read the story (and you should, since it's only a page long), here's a summary. A man comes from the country to see the law. He encounters a gatekeeper who tells him he can't enter at this time but it might be possible later. The gatekeeper also implies that even if the man does pass through this door, there is a succession of doors and gatekeepers, each more terrible than the last, so that in some sense he may as well not bother. Well, the man just sits there for years. He tries bribes. He asks the gatekeeper lots of questions. He grows old and even resorts to imploring the fleas in the gatekeeper's fur collar to help him change the gatekeeper's mind. In the end, he dies having never passed the first door (and there's a version of Zeno's paradox that goes this way: being unable to take the first step). Non-arrival, indeed.

This class is about mathematics as metaphor in literature, and many of Kafka's works make use of the infinite in one form or another (more on that next week). But what about literature as metaphor for mathematics? If ever there were a Kafkaesque branch of math, it would have to be Cantor's work on the infinite. Before Cantor, everyone more or less assumed that infinity is infinity; that is, that there is only one level of infinity or, more precisely, that all infinite sets have the same cardinality. Cantor demonstrated rather dramatically that this is false. In fact, a consequence of his work is that there is an infinity of infinities, each larger than the last.

If you've never thought about this before, it can be really counterintuitive and difficult to accept, but I imagine Kafka, who claimed to have great difficulties with all things scientific, would have appreciated the mathematical abyss Cantor opened up for us. I do not use the term abyss lightly: Cantor was attacked and mocked by his contemporaries, often viciously, and this fueled his depression and ultimately led to multiple hospitalizations for treatment; he died poor and malnourished in a sanatorium in 1918. Poincaré referred to Cantor's work as a "grave disease" infecting mathematics; Wittgenstein dismissed it as "utter nonsense." But Cantor's ideas survived and are considered fundamental to mathematics today.

So just how weird are we talking here? First a question: What is an infinite set? A set that isn't finite, right? OK.

Definition 1. A function \(f:A\to B\) is a bijection if the following two conditions hold: (a) \(f\) is injective; that is, if \(a_1\ne a_2\) are distinct elements of \(A\), then \(f(a_1)\ne f(a_2)\); and (b) \(f\) is surjective; that is, if \(b\in B\), there is some \(a\in A\) with \(f(a)=b\).

Definition 2. A set \(S\) is finite if there is a bijection \(f:S\to\{1,2,\dots ,n\}\) for some \(n\ge 0\) (when \(n=0\), this is the empty set). In this case, we say that \(n\) is the cardinality of \(S\). This notion of size is well-defined, meaning that \(S\) can't be in bijection with both \(\{1,\dots,n\}\) and \(\{1,\dots,m\}\) for \(m\ne n\), but that requires a (simple) proof, essentially the pigeonhole principle.
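For finite sets, Definitions 1 and 2 can be checked by brute force. Here is a minimal Python sketch; the helper names `is_injective`, `is_surjective` and `is_bijection` are my own, and this approach only works when \(A\) and \(B\) are finite, since it literally looks at every element.

```python
def is_injective(f, A):
    """Distinct inputs give distinct outputs: no value of f is hit twice."""
    images = [f(a) for a in A]
    return len(set(images)) == len(images)


def is_surjective(f, A, B):
    """Every element of B is f(a) for some a in A."""
    return set(B) <= {f(a) for a in A}


def is_bijection(f, A, B):
    """Definition 1, plus a check that f really lands in B."""
    return all(f(a) in B for a in A) and is_injective(f, A) and is_surjective(f, A, B)


# Definition 2: this set has cardinality 3, witnessed by a bijection onto {1, 2, 3}.
S = {"Achilles", "tortoise", "Zeno"}
labels = {"Achilles": 1, "tortoise": 2, "Zeno": 3}
print(is_bijection(labels.get, S, {1, 2, 3}))   # True
print(is_bijection(lambda s: 1, S, {1, 2, 3}))  # False: everything goes to 1
```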

Now, let's denote by \({\mathbb N}\) the set of natural numbers, \(\{0,1,2,3,\dots\}\), where the dots mean go on forever. These are the numbers we use to count, and we know there are infinitely many. In some sense, this is the simplest infinite set there is.

Definition 3. A set \(S\) is countably infinite if there is a bijection \(f:S\to {\mathbb N}\). (Loosely, I'll just call such sets countable.)

You might think that every infinite set is countable, because, you know, infinity is infinity, but you'd be wrong (more on that below). For now, here are some examples of countably infinite sets. The whole set of integers \({\mathbb Z} = \{\dots ,-2,-1,0,1,2,\dots \}\) is countable. Now, wait, you say, there are clearly more integers than natural numbers, twice as many in fact. But all I have to do is produce a bijection. Here's one: \(f:{\mathbb Z}\to {\mathbb N}\) defined by \(f(n) = 2n\) for \(n\ge 0\) and \(f(n) = -2n-1\) for \(n<0\). You can check that this works: the nonnegative integers land on the even numbers and the negative integers land on the odd numbers, each exactly once. The set \(E\) of even natural numbers is countable, too: take \(f:E\to{\mathbb N}\) given by \(f(n) = n/2\). Huh? There are only half as many even numbers as there are natural numbers. So, we already see that infinity can be weird.
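Here is the bijection \(f:{\mathbb Z}\to{\mathbb N}\) in Python, along with an inverse I've added to make the "you can check" step concrete (`z_to_n`, `n_to_z` and `evens_to_n` are hypothetical names). A computer can only spot-check a finite window, so the asserts are a sanity check, not a proof; the proof is that the inverse formula undoes \(f\) for every integer.

```python
def z_to_n(n: int) -> int:
    """f: Z -> N. Nonnegative integers go to the evens, negative ones to the odds."""
    return 2 * n if n >= 0 else -2 * n - 1


def n_to_z(m: int) -> int:
    """Inverse of z_to_n: even m came from m/2, odd m came from -(m+1)/2."""
    return m // 2 if m % 2 == 0 else -((m + 1) // 2)


def evens_to_n(n: int) -> int:
    """The map E -> N from the text: halve an even natural number."""
    return n // 2


print([z_to_n(n) for n in range(-3, 4)])         # [5, 3, 1, 0, 2, 4, 6]
print([evens_to_n(n) for n in range(0, 10, 2)])  # [0, 1, 2, 3, 4]

# Spot check: the integers -1000..1000 land exactly on 0..2000, each once.
window = range(-1000, 1001)
assert sorted(z_to_n(n) for n in window) == list(range(2001))
assert all(n_to_z(z_to_n(n)) == n for n in window)
```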

It gets weirder. Let \({\mathbb Q}\) be the set of rational numbers; that is, fractions of the form \(a/b\) where \(a,b\in {\mathbb Z}\), \(b\ne 0\). Of course, there are duplicates when we write them in this form (\(1/2 = 2/4 = -1/-2\)), but we can insist that \(b>0\) and that \(a\) and \(b\) are relatively prime. This set is countable, too. There are clever diagrams that prove this, but I will simply list the rationals: \[0,1,-1,\frac{1}{2},-\frac{1}{2},2,-2,\frac{1}{3},-\frac{1}{3},\frac{2}{3},-\frac{2}{3},\frac{3}{2},-\frac{3}{2},3,-3,\dots\] Here's the pattern: after \(0\), list the fractions (in lowest terms, with \(b>0\)) whose numerator and denominator are at most \(1\) in absolute value, then the new ones with both at most \(2\), then \(3\), and so on, going from smallest to largest absolute value within each batch and following each positive number with its negative. Each batch is finite, so every rational number ends up on the list exactly once, and so this is a bijection between \({\mathbb Q}\) and \({\mathbb N}\). Weirder still: the set of algebraic numbers is countable. These are the numbers which are solutions to polynomial equations with integer coefficients (not all zero). You might think there are a lot of these (well, yeah, there are infinitely many), but they're countable: there are only countably many such polynomials, and each one has finitely many roots.
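One way to generate the list of rationals is batch by batch, where the batch at "height" \(h\) holds the fractions \(a/b\) in lowest terms with \(b>0\) and \(\max(|a|, b) = h\). This is a sketch of one reading of the pattern, written as a Python generator (the name `rationals` is mine); since each batch is finite, any given rational shows up after finitely many steps.

```python
from fractions import Fraction
from itertools import count, islice
from math import gcd


def rationals():
    """Yield every rational exactly once, batch by batch.

    Batch h holds the positive fractions a/b in lowest terms with max(a, b) == h,
    listed from smallest to largest, each followed by its negative.
    """
    yield Fraction(0)
    for h in count(1):
        below_one = [Fraction(a, h) for a in range(1, h) if gcd(a, h) == 1]
        at_least_one = [Fraction(h, b) for b in range(1, h + 1) if gcd(h, b) == 1]
        for q in sorted(below_one + at_least_one):
            yield q
            yield -q


print(", ".join(str(q) for q in islice(rationals(), 15)))
# 0, 1, -1, 1/2, -1/2, 2, -2, 1/3, -1/3, 2/3, -2/3, 3/2, -3/2, 3, -3

# No repeats among the first 2000 entries.
first = list(islice(rationals(), 2000))
assert len(set(first)) == len(first)
```

Any ordering would do, as long as every batch is finite; grouping by height is just a convenient way to guarantee that.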

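OK. So, what about an uncountable set? I claim the set of real numbers \({\mathbb R}\) is uncountable. Two sets have the same cardinality if there is a bijection between them, so to prove this, I will show (a) \({\mathbb R}\) has the same cardinality as the open interval \((0,1)\), and (b) \((0,1)\) is uncountable. The first one is easy; here is a bijection between \((0,1)\) and \({\mathbb R}\): \[f(x) = \tan\biggl(\pi\biggl(x-\frac{1}{2}\biggr)\biggr).\] As \(x\) runs over \((0,1)\), \(\pi(x-\frac{1}{2})\) runs over \((-\frac{\pi}{2},\frac{\pi}{2})\), where the tangent function is increasing and takes every real value exactly once. To prove that the interval \((0,1)\) is uncountable, we use Cantor's Diagonalization Argument.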
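Here is the map \(f\) in Python, together with its inverse \(f^{-1}(y) = \arctan(y)/\pi + \tfrac{1}{2}\) (the inverse is my addition, not something from the text). Floating-point numbers only approximate real numbers, so the round trip holds up to rounding error rather than exactly; this is an illustration, not a proof.

```python
import math


def f(x: float) -> float:
    """(0, 1) -> R: stretch (0, 1) onto (-pi/2, pi/2), then apply tan."""
    if not 0 < x < 1:
        raise ValueError("f is only defined on the open interval (0, 1)")
    return math.tan(math.pi * (x - 0.5))


def f_inverse(y: float) -> float:
    """R -> (0, 1): arctan lands in (-pi/2, pi/2); shift and scale back."""
    return math.atan(y) / math.pi + 0.5


print(f(0.5))          # 0.0
print(f_inverse(0.0))  # 0.5
for x in [0.001, 0.25, 0.5, 0.75, 0.999]:
    assert math.isclose(f_inverse(f(x)), x)
```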

Suppose we had a bijection \(f:{\mathbb N}\to (0,1)\) (we can run our bijections in either direction). That would mean we could put the numbers in \((0,1)\) in a list (using decimal expansions of the numbers): \[0.a_1a_2a_3a_4\dots \] \[0.b_1b_2b_3b_4\dots \] \[0.c_1c_2c_3c_4\dots \] \[0.d_1d_2d_3d_4\dots \] \[\vdots\] Consider the following number \(x\): the \(i\)th digit of \(x\) is one more than the \(i\)th digit of the \(i\)th number on the list, except that if that digit is \(8\) or \(9\), we use \(1\) instead. (This way \(x\) has no \(0\)s or \(9\)s. That matters because some numbers have two decimal expansions, like \(0.1999\ldots = 0.2000\ldots\), but a number whose expansion avoids \(0\) and \(9\) has only one, so \(x\) can't be hiding on the list under a different expansion; it also can't be \(0\) or \(1\).) Now, ask yourself: is \(x\) on this list? It can't be the first number since it differs in the first digit; it can't be the second number since it differs in the second digit; it can't be the third or the fourth or the \(i\)th for any \(i\) since it differs from the \(i\)th number in at least the \(i\)th spot. So \(x\) is not on the list; that is, our function \(f\) is not surjective, a contradiction. So no such bijection exists and \((0,1)\) is uncountable.

Now, you might say, well, we can fix that. Just bump everything on the list down one spot and add \(x\) at the beginning. But then we could just do it again to construct a new number that isn't on the list. And so on, and so on, and so on. So there's an infinite amount of hope (to solve this), just not for us.
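The diagonal construction can be tried on a finite list, with each number given by its first few decimal digits as a string (the truncation, and the name `diagonal`, are assumptions for illustration; a genuine list would be infinite). The digit rule here adds \(1\) but sends \(8\) and \(9\) to \(1\), so the new number never contains a \(0\) or a \(9\) and can't be a second decimal expansion of something already on the list. The last lines try the "bump everything down and add \(x\)" fix and diagonalize again.

```python
def diagonal(rows: list[str]) -> str:
    """Return digits whose i-th entry differs from the i-th digit of rows[i].

    Rule: add 1 to the diagonal digit, except that 8 and 9 become 1,
    so the result uses only the digits 1 through 8.
    """
    out = []
    for i, row in enumerate(rows):
        d = int(row[i])
        out.append("1" if d >= 8 else str(d + 1))
    return "".join(out)


rows = [
    "1415926535",  # pi - 3
    "7182818284",  # e - 2
    "4142135623",  # sqrt(2) - 1
    "7320508075",  # sqrt(3) - 1
    "2360679774",  # sqrt(5) - 2
    "1999999999",  # start of 0.1999..., which equals 0.2
]
x = diagonal(rows)
print("0." + x)  # 0.225171
assert all(x[i] != row[i] for i, row in enumerate(rows))

# "Fix" the list by putting x first; diagonalizing again finds another missing number.
y = diagonal([x] + rows)
print("0." + y)  # 0.3513681
```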

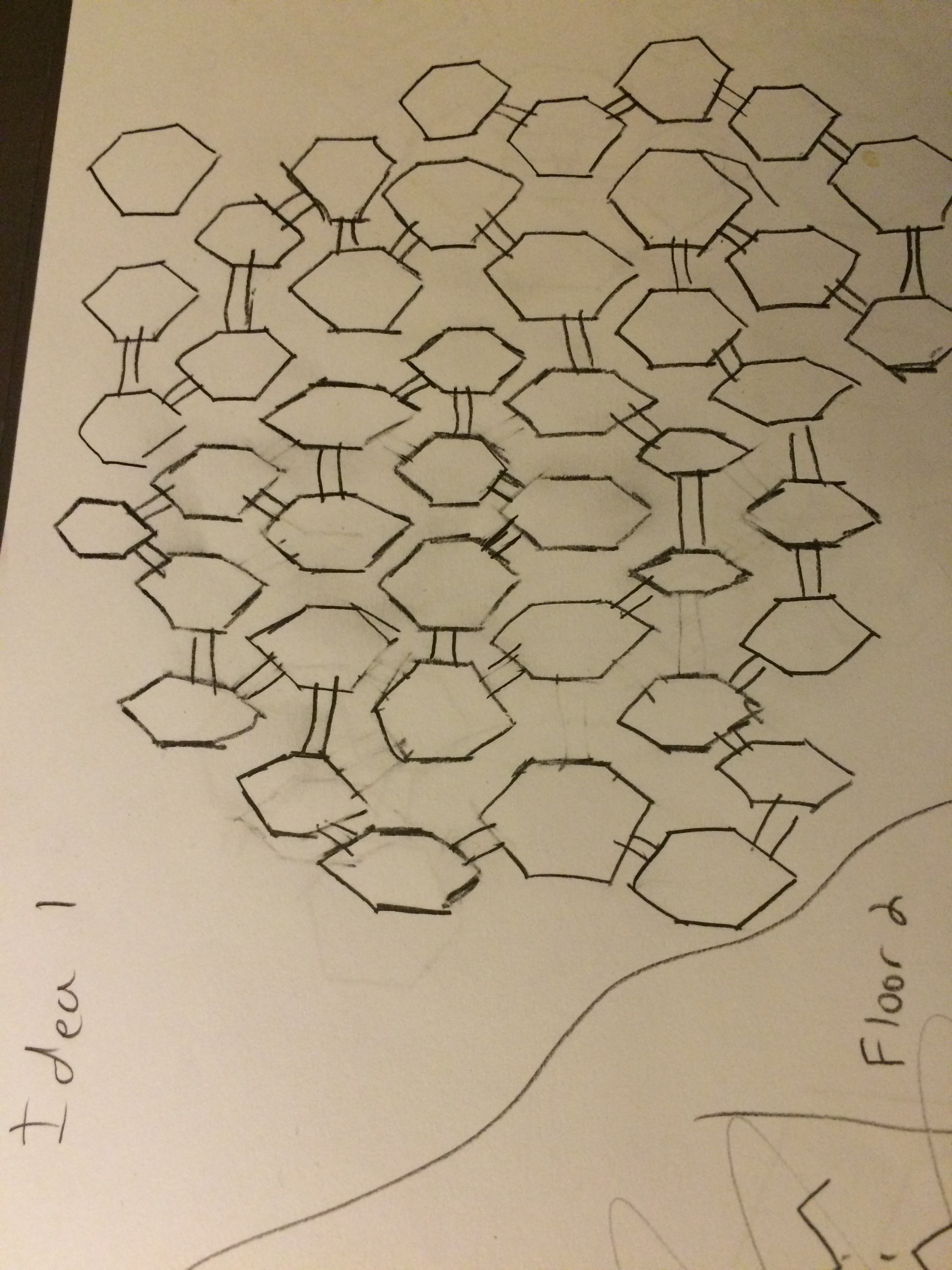

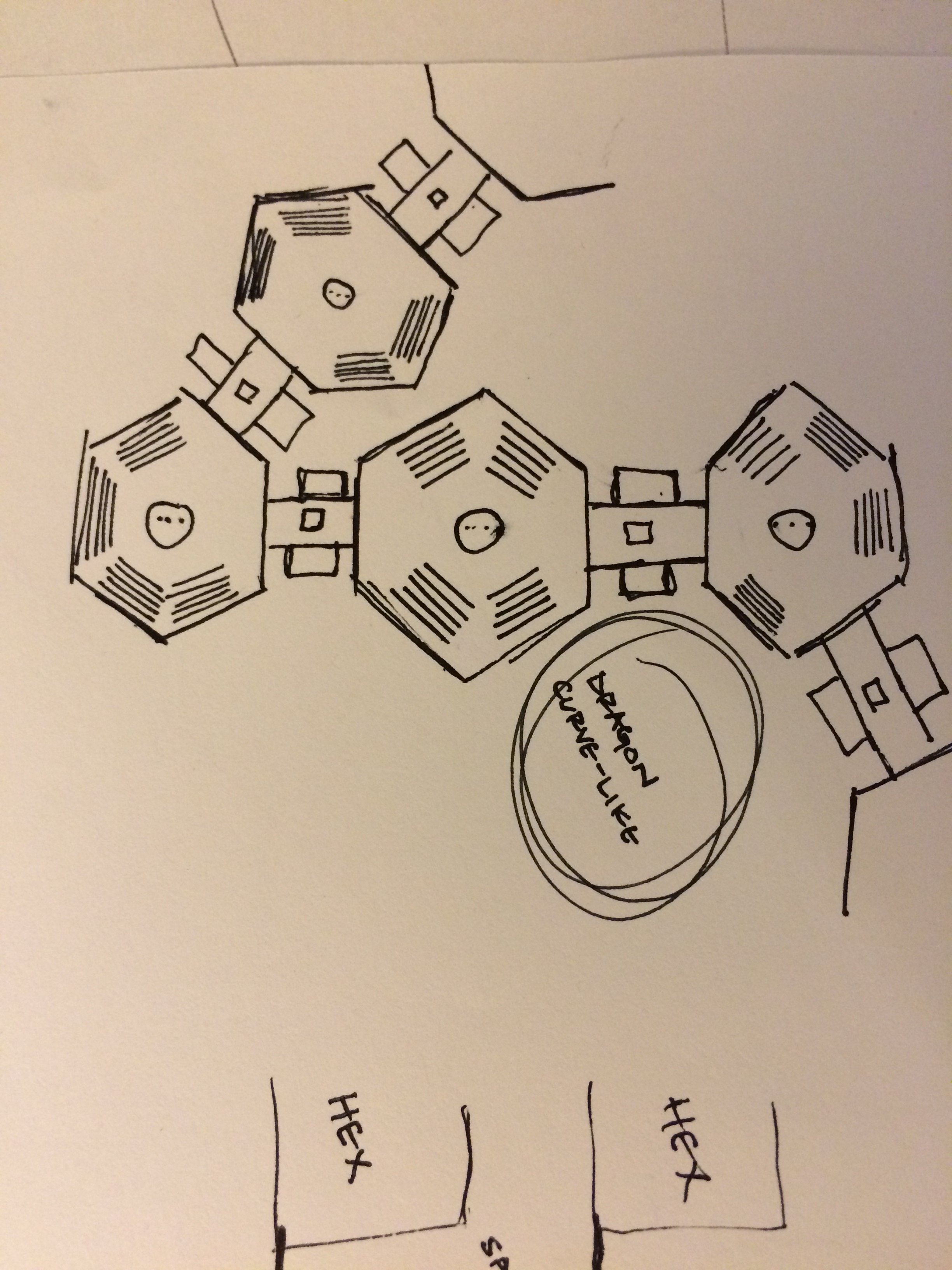

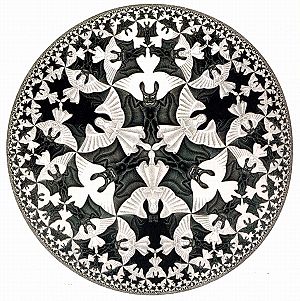

Cantor constructed all sorts of weird stuff, and I'll say more about that next week in relation to Kafka's Building the Great Wall of China. For now, though, let me end by showing how there is an infinity of infinities. This idea has been around for a long time: recall the Hindu story of the earth being held up by an elephant who is standing on a turtle. But what's the turtle standing on? Well, it's turtles all the way down. Or consider Bertrand Russell's rebuttal of the First Cause argument for the existence of God: everything that exists has a cause; the earth exists, so it has a cause; that cause is God. But Russell pointed out that God would then have to have a cause, a meta-God of sorts, which would also have a cause (a meta-meta-God), and so on, producing an infinite string of \(\text{meta}^n\)-Gods, each more powerful than the last (Kafka squeals with delight).

The trick for producing ever larger sets is the power set construction. It goes like this: let \(A\) be any set. Denote by \(P(A)\) the set of all subsets of \(A\). It is clear that the cardinality of \(P(A)\) is at least that of \(A\), since we may find an injection of \(A\) into \(P(A)\) (the function \(f(a) = \{a\}\) will do). But no map from \(A\) to \(P(A)\) can be a surjection, so \(P(A)\) is strictly bigger. The idea is to assume you have a bijection \(f:A\to P(A)\) and then build a subset of \(A\) which can't be in the image of \(f\), just like Cantor's Diagonalization Argument. Since I've assigned this as a homework problem, I won't divulge the answer here, but I will say there is some relation to Russell's Paradox.
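For finite sets the power set can be listed outright. The sketch below (the name `power_set` is mine) builds \(P(A)\) with `itertools.combinations`, checks that \(f(a) = \{a\}\) is an injection into it, and shows \(|P(A)| = 2^{|A|}\) pulling away from \(|A|\). It deliberately stops short of the homework: counting only shows \(P(A)\) is bigger when \(A\) is finite, and the infinite case needs the diagonal-style argument.

```python
from itertools import chain, combinations


def power_set(A):
    """All subsets of A, as frozensets so that they can live inside a set."""
    items = list(A)
    subsets = chain.from_iterable(combinations(items, r) for r in range(len(items) + 1))
    return {frozenset(s) for s in subsets}


A = {"man", "gatekeeper", "door"}
P = power_set(A)
print(len(P))  # 8

# The injection a -> {a}: distinct elements give distinct singletons, all inside P(A).
singleton = {a: frozenset({a}) for a in A}
assert len(set(singleton.values())) == len(A)
assert all(s in P for s in singleton.values())

for n in range(6):
    print(n, len(power_set(range(n))))  # 2 ** n subsets for n elements
```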

Anyway, assuming this, we now see how we can get bigger and bigger infinite sets. Start with the natural numbers \({\mathbb N}\) and then iterate the power set construction. The set \(P({\mathbb N})\) must be uncountable, and the set \(P(P({\mathbb N}))\) is larger still. This leads to the whole area of transfinite arithmetic, which I don't know much about and won't try to explain, but which I think you'd agree must be pretty wild.

If Borges is right that each writer creates his precursors, then I think we have to count Cantor among Kafka's.