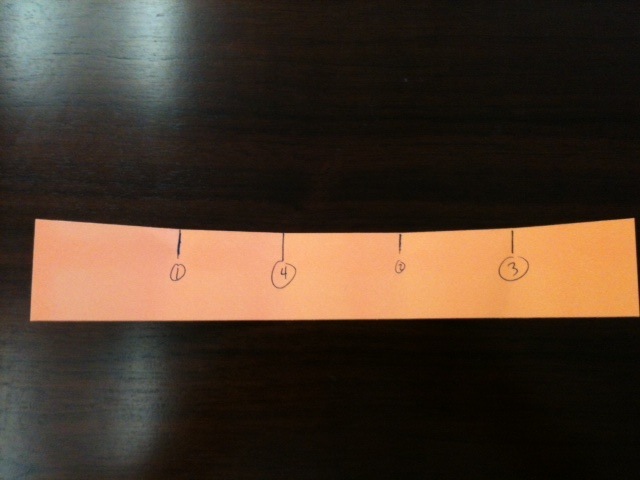

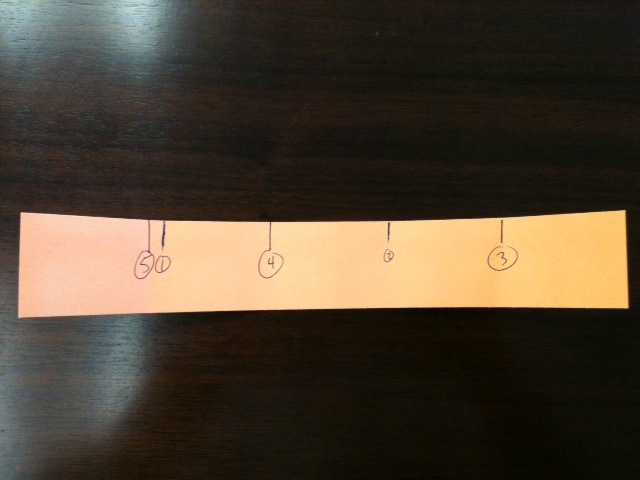

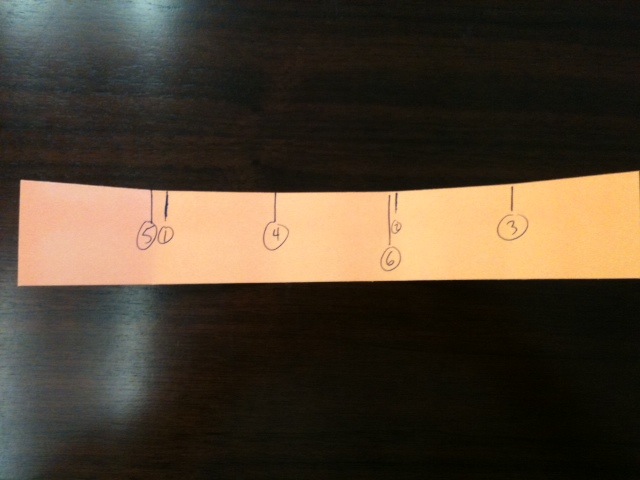

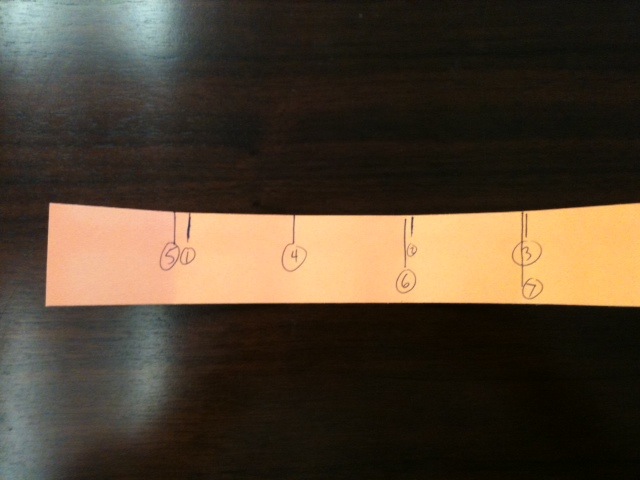

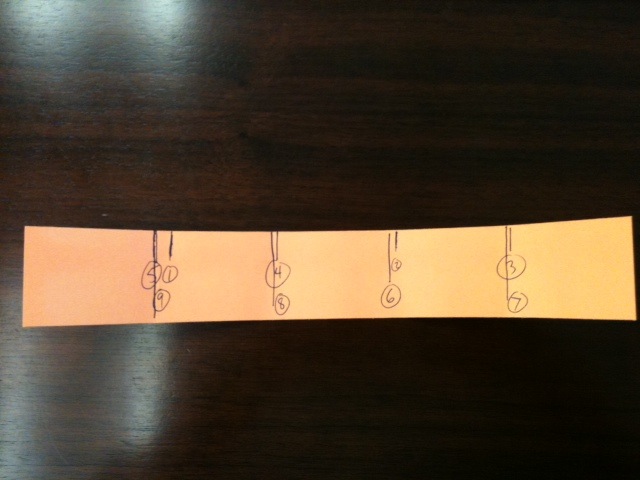

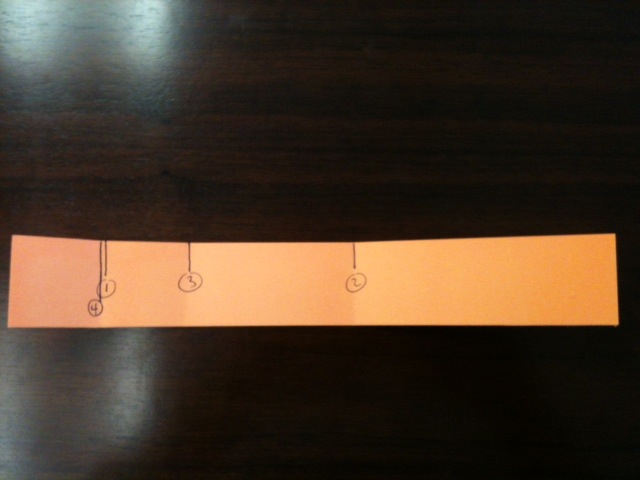

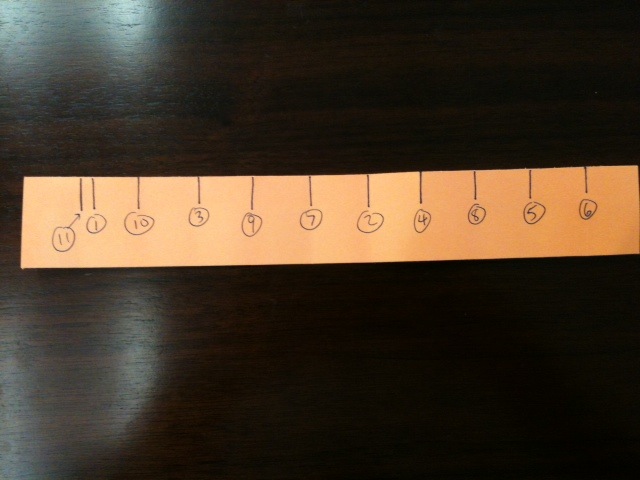

In this case, I made a mark at all \( 10 \) of the subdivisions, coming back to a place near my initial guess. Running through the algorithm again would get me back so close to mark number \( 11 \) that I didn't bother to do it.

Now, here's something we can do. At each stage we do one of two things: fold the right edge to a point in the interior, or fold the left edge to a point in the interior. If we write down the sequence of folds for a few values of \( n \), we get the following (each sequence repeats forever).

\( \frac{1}{3} \quad RLRLRLRL\dots \)

\( \frac{1}{5} \quad RRLLRRLLRRLL\dots \)

\( \frac{1}{7} \quad RLLRLLRLL\dots \)

\( \frac{1}{9} \quad RRRLLLRRRLLL\dots \)

\( \frac{1}{11} \quad RLRRRLRLLL\dots \)
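You can generate these sequences with pen-and-paper bookkeeping instead of a strip. The sketch below tracks the current mark as \( k/n \) of the way along the strip (from the left edge), starting from an idealized first mark at exactly \( \frac{1}{n} \). The rule for choosing which edge to fold is an assumption of this sketch, not something stated above: fold whichever edge is an even number of \( n \)ths away from the mark, so the new crease lands exactly on another \( n \)th. With that rule it reproduces all five sequences in the list.

```python
def fold_sequence(n: int) -> str:
    """Return one period of the fold sequence for an odd n >= 3.

    The current mark sits k/n of the way along the strip, measured from
    the left edge, starting from an idealized first mark at k = 1.
    Assumed rule: fold whichever edge is an even number of n-ths away
    from the mark.
    """
    if n < 3 or n % 2 == 0:
        raise ValueError("n must be an odd integer >= 3")
    k = 1
    folds = []
    while True:
        if (n - k) % 2 == 0:
            # The right edge is an even number of n-ths away: fold it onto
            # the mark, creasing halfway between the mark and the right edge.
            k = k + (n - k) // 2
            folds.append("R")
        else:
            # Otherwise k is even: fold the left edge onto the mark,
            # creasing halfway between the left edge and the mark.
            k = k // 2
            folds.append("L")
        if k == 1:  # back at the first mark: one full period is done
            return "".join(folds)


for n in (3, 5, 7, 9, 11):
    print(n, fold_sequence(n))
```

For \( n = 11 \) the marks visited are \( 6, 3, 7, 9, 10, 5, 8, 4, 2 \) and then \( 1 \) again, which matches making a mark at all \( 10 \) subdivisions before returning to the start.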

There's a connection between this and writing the fraction \( \frac{1}{n} \) in binary. We all know from grade school that \( \frac{1}{3} = 0.3333\dots \) and \( \frac{1}{7} = 0.142857\dots \). Of course, what this really means is that

\( \frac{1}{3} = \frac{3}{10} + \frac{3}{100} + \frac{3}{1000} +\cdots + \frac{3}{10^r} +\cdots \)

\( \frac{1}{7} = \frac{1}{10} + \frac{4}{100} + \frac{2}{1000} + \frac{8}{10^4} + \frac{5}{10^5} + \frac{7}{10^6} + \cdots \)

But there's nothing special about \( 10 \), except that we have \( 10 \) fingers. We could just as well write these fractions with powers of \( 2 \) in the denominators:

\( \frac{1}{3} = \frac{0}{2} + \frac{1}{2^2} + \frac{0}{2^3} + \frac{1}{2^4} +\cdots \)

\( \frac{1}{5} = \frac{0}{2} + \frac{0}{2^2} + \frac{1}{2^3} + \frac{1}{2^4} + \frac{0}{2^5} + \frac{0}{2^6} + \cdots \)

\( \frac{1}{7} = \frac{0}{2} + \frac{0}{2^2} + \frac{1}{2^3} + \frac{0}{2^4} + \frac{0}{2^5} + \frac{1}{2^6} +\cdots \)

\( \frac{1}{9} = \frac{0}{2} + \frac{0}{2^2} + \frac{0}{2^3} + \frac{1}{2^4} + \frac{1}{2^5} + \frac{1}{2^6} + \cdots \)

and so on. Note that for any odd \( n \), this "decimal" expansion always repeats, and in fact it repeats starting from the very first digit.
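These expansions come from ordinary long division, just carried out in a different base. Here is a minimal sketch (the function name is my own): multiply the remainder by the base, take the quotient as the next digit, keep the new remainder, and stop when the remainder returns to \( 1 \). That return is guaranteed when \( n > 1 \) shares no factor with the base, which is also why the expansion repeats from the first digit.

```python
from math import gcd


def repeating_block(n: int, base: int = 2) -> str:
    """Digits of one period of 1/n in the given base (2 <= base <= 10).

    Long division: multiply the remainder by the base; the quotient is the
    next digit and the new remainder carries on. When n > 1 has no factor
    in common with the base, the remainder comes back to 1 after one
    period, so the digits repeat from the very first one.
    """
    if not 2 <= base <= 10:
        raise ValueError("base must be between 2 and 10")
    if n < 2 or gcd(n, base) != 1:
        raise ValueError("need n > 1 with no factor in common with the base")
    digits = []
    r = 1
    while True:
        r *= base
        digits.append(str(r // n))
        r %= n
        if r == 1:
            return "".join(digits)


print(repeating_block(7, base=10))  # 142857
print(repeating_block(7))           # 001
print(repeating_block(9))           # 000111
```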

Now look closely. Use the dictionary \( R = 1, L = 0 \), and read the fold sequence from right to left, beginning at the last letter of the repeating chunk: what comes out is the binary expansion of \( \frac{1}{n} \). For example, \( \frac{1}{5} = 0.\overline{0011}_2 \) and the fold sequence is \( RRLL \), which read backwards is \( LLRR = 0011 \). Since the fold sequence for \( n=11 \) is \( RLRRRLRLLL \), we find that

\( \frac{1}{11} = \frac{0}{2} + \frac{0}{2^2} + \frac{0}{2^3} + \frac{1}{2^4} + \frac{0}{2^5} + \frac{1}{2^6} + \frac{1}{2^7} + \frac{1}{2^8} + \frac{0}{2^9} + \frac{1}{2^{10}} + \cdots \)
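A short sketch makes the dictionary concrete and checks the result exactly. It relies on one standard fact about repeating expansions: a block of \( p \) binary digits with integer value \( m \), repeated forever, equals \( \frac{m}{2^p - 1} \) (a geometric series). The helper names are my own.

```python
from fractions import Fraction


def folds_to_binary(folds: str) -> str:
    """Translate one period of a fold sequence into binary digits.

    Dictionary R -> 1, L -> 0, read from right to left.
    """
    table = {"R": "1", "L": "0"}
    return "".join(table[f] for f in reversed(folds))


def periodic_binary_value(block: str) -> Fraction:
    """Exact value of 0.(block)(block)(block)... in base 2.

    A repeating block of p digits with integer value m equals m / (2**p - 1).
    """
    return Fraction(int(block, 2), 2 ** len(block) - 1)


bits = folds_to_binary("RLRRRLRLLL")
print(bits)                         # 0001011101
print(periodic_binary_value(bits))  # 1/11
```

Here the block `0001011101` is the integer \( 93 \), and \( \frac{93}{2^{10} - 1} = \frac{93}{1023} = \frac{1}{11} \).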

This is a fun fact. So, for any odd \( n \), if you want to know the binary expansion of \( \frac{1}{n} \), you only need to figure out the fold sequence, and really, you don't even need a strip of paper to do it. You only need to think about the fold sequence; maybe use a pen and paper to keep track. For example, if \( n = 2^r + 1 \) for some positive integer \( r \), then the fold sequence is obviously

\( \underbrace{RR\cdots R}_{r}\,\underbrace{LL\cdots L}_{r} \)

and so, since that block of \( 2r \) digits repeats forever,

\( \frac{1}{2^r + 1} = \frac{0}{2} + \cdots + \frac{0}{2^r} + \frac{1}{2^{r+1}} +\cdots + \frac{1}{2^{2r}} + \cdots \)
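You can also confirm this expansion directly. A block of \( r \) zeros followed by \( r \) ones is the integer \( 2^r - 1 \), and a block of \( 2r \) binary digits with integer value \( m \), repeated forever, sums (as a geometric series) to \( \frac{m}{2^{2r} - 1} \). So the repeating expansion equals

\( \frac{2^r - 1}{2^{2r} - 1} = \frac{2^r - 1}{(2^r - 1)(2^r + 1)} = \frac{1}{2^r + 1} \)

For instance, with \( r = 2 \) the block is \( 0011 = 3 \), and \( \frac{3}{15} = \frac{1}{5} \).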

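Finally, a word about the iterative process. There are exact methods for folding a strip of paper into \( n \)ths, but they are (a) very complicated, and (b) not more accurate in practice. Indeed, it is difficult to fold exactly, and errors inevitably creep in. This algorithm works just as well and is much easier to implement. Because each fold puts the new crease halfway between the previous mark and an edge, any error in a mark is cut in half at the next fold, so small mistakes (including a rough first guess) die out instead of piling up.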
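To put a number on how forgiving the process is, here is a sketch that starts from a deliberately imprecise first mark and assumes every later fold is perfect. Folding an edge onto a mark creases the strip at their midpoint, so the distance from the intended \( n \)th halves with every fold; after one full period for \( n = 11 \) (ten folds) the error should be about \( 2^{10} = 1024 \) times smaller. The function name and the parity rule used to pick which edge to fold are assumptions of this sketch.

```python
def fold_with_error(n: int, guess: float, periods: int = 1) -> float:
    """Fold from an imprecise first mark; return the final mark's position.

    Positions are fractions of the strip length measured from the left edge.
    `guess` is where the first mark was actually made (ideally 1/n).
    The integer k records which n-th the current mark is meant to be and
    decides which edge to fold (assumed parity rule); p is the position
    that the perfect folds actually produce.
    """
    k, p = 1, guess
    for _ in range(periods):
        while True:
            if (n - k) % 2 == 0:
                k = k + (n - k) // 2
                p = (p + 1) / 2  # right edge (at 1) folded onto the mark at p
            else:
                k //= 2
                p = p / 2        # left edge (at 0) folded onto the mark at p
            if k == 1:           # one full period: back at the first n-th
                break
    return p


n = 11
guess = 0.10                     # first mark made a little too far right
err_before = guess - 1 / n
err_after = fold_with_error(n, guess) - 1 / n
ratio = err_before / err_after
print(ratio)                     # close to 2**10 = 1024
```

Running more periods keeps shrinking the error by the same factor, which is why one more pass was hardly worth the effort.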

Happy folding!