Who owned this book? Was it Connie Flynn (a boy)? Or was it Marie David? I'll guess the latter, since boys didn't (don't) usually write such things in their books, and because the handwriting in the various margins is a nice script, more likely to be a girl's. Note also the lighter heart-shaped box with Nancy Canby + -?- -?-, probably Marie's friend looking for love.

The book is from the 1940s. In those days, this is probably about as far as most students got in mathematics in high school. Sure, some would study trigonometry and perhaps pre-calculus, but for most students a second course in algebra was sufficient, even for those planning on going to college. I won't go on my rant about how too many students are being pushed into calculus in high school these days (see the second half of this if you want to read that). Instead, I'd like to write a few words on the virtues of a more careful study of algebra, including the dying art of logarithms.

Back in the 1600s, multiplication was a real problem, especially for astronomical and surveying calculations. Computers didn't exist and wouldn't for another 300 years, so mathematicians and their ilk were forced to perform tedious calculations by hand. It was not unusual for a collection of calculations to take months, simply because multiplying two large numbers by hand takes a lot of work. For example, say you need to multiply two 6-digit numbers. Using the algorithm you learned in grade school, this requires 36 single-digit multiplications, and then you have to add up six rows of partial products, column by column across a dozen or so columns, to get the final result. Even if you're fast and know your multiplication tables well (which the average 17th-century person did not), this will take you a couple of minutes. Now imagine you're constructing a table of values for something (e.g., planetary motion or sea navigation) and you have to do this a few thousand times. First, your hand will cramp up; second, you are bound to make errors; third, it will take months of hard work.
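To make that count concrete, here is a minimal Python sketch of grade-school long multiplication that tallies the single-digit products it performs. The function name `schoolbook_multiply` is hypothetical. Rather than writing out separate rows of partial products, it accumulates each column sum directly and then propagates carries. This is equivalent, just a little more compact.

```python
def schoolbook_multiply(a, b):
    """Multiply two non-negative integers digit by digit, as in grade school.

    Returns (product, number_of_single_digit_multiplications).
    """
    a_digits = [int(d) for d in reversed(str(a))]   # least significant digit first
    b_digits = [int(d) for d in reversed(str(b))]
    columns = [0] * (len(a_digits) + len(b_digits))  # one column per place value
    digit_products = 0
    for i, db in enumerate(b_digits):
        for j, da in enumerate(a_digits):
            columns[i + j] += da * db                # one single-digit multiplication
            digit_products += 1
    # The "additions" step: sum each column and carry into the next one.
    carry = 0
    for k in range(len(columns)):
        total = columns[k] + carry
        columns[k] = total % 10
        carry = total // 10
    product = int("".join(str(d) for d in reversed(columns)))
    return product, digit_products


print(schoolbook_multiply(123456, 654321))
```

For two 6-digit inputs the second number it returns is 36, one per pair of digits. The 4-digit by 3-digit example later in this post, `schoolbook_multiply(2345, 762)`, needs 12.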

A crucial insight by John Napier, a Scottish laird, which was refined by the famous English mathematician Henry Briggs, made this problem much more tractable. The insight: addition is easier than multiplication. Well, duh, you say, and so what? The idea is that if you have a fixed base number \( x\), then for any exponents \( a \) and \( b\) we have \[ x^a x^b = x^{a+b}. \]

Now define, for a fixed base \( x > 0 \) with \( x \neq 1 \), the base \( x \) logarithm of the positive number \( N \) to be the exponent \( a \) that makes the following equation hold: \[ x^a = N. \]

We write this as \( a = \log_x N \). Now suppose you want to multiply two numbers \( N \) and \( M\). Then, writing \( N = x^a \) and \(M = x^b \), we have \[ NM = x^a x^b = x^{a+b}. \]

Taking the logarithm of both sides of this equation, we see that \[ \log_x(NM) = a+b = \log_x(N) + \log_x(M). \]
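A quick sanity check with base \( x = 2 \), where everything is a whole number: \[ \log_2(8 \cdot 32) = \log_2 256 = 8 = 3 + 5 = \log_2 8 + \log_2 32. \]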

This is magical! We've turned multiplication into addition. This suggests the following procedure: fix a base \( x \), say 10, and calculate the logarithms of a regular selection of the numbers between 1 and \( x\). Create a table of these values. Then to multiply two numbers \( N \) and \( M \), write each of them in scientific notation: \( N = u \times 10^r \) and \( M = v \times 10^s \), where \( 1 \le u, v < 10 \) and \( r, s \) are integers. Then \[ \log_{10} (NM) = \log_{10} (u) + \log_{10} (v) + r + s, \]

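where I've done a little algebra to get this simple form. Look up the logarithms of \( u \) and \( v \) in the table and add them. The integer part of this sum, together with \( r + s \), gives the power of 10 in the product; find the fractional part in the table and read backwards to get the leading digits of \( NM \).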
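The little algebra uses only the product rule for logarithms and the fact that \( \log_{10}(10^k) = k \). First, \[ NM = (u \times 10^r)(v \times 10^s) = uv \times 10^{r+s}, \] so \[ \log_{10}(NM) = \log_{10}(u) + \log_{10}(v) + \log_{10}(10^{r+s}) = \log_{10}(u) + \log_{10}(v) + r + s. \]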

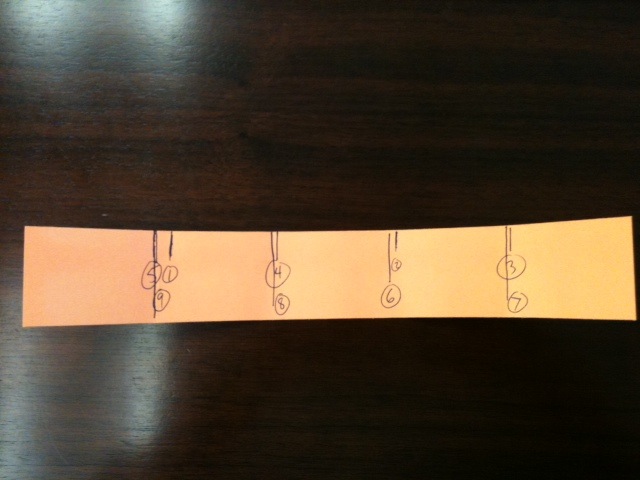

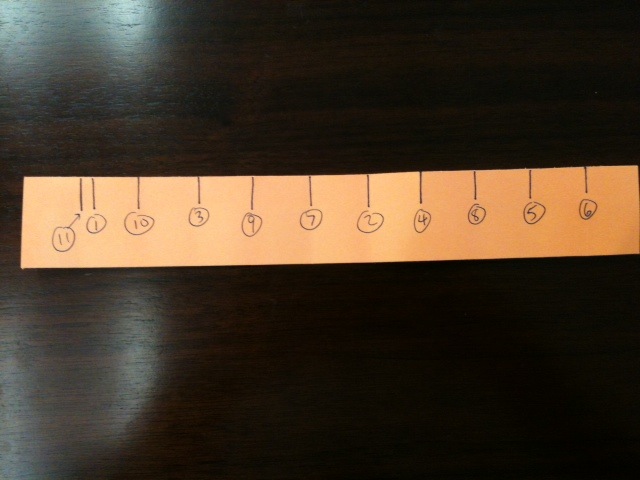

The base 10 (a.k.a. common) logarithm table is the last picture above. Also, I love the doodles you can see: the little face at the top near the center, and the girl's face on the right side. Anyway, let's do an example: let's multiply 2345 by 762. Here's how it goes. We have \( 2345 = 2.345 \times 10^3 \) and \( 762 = 7.62 \times 10^2 \). Find the logarithms of \( 2.345 \) and \( 7.62 \) in the table: \( \log(2.345) \approx .3701 \) and \( \log(7.62) \approx .8820 \). Adding these gives \( 1.2521 \), and adding the exponents 3 and 2 then gives \( \log(2345\cdot 762) \approx 6.2521 \). Now read the table backwards: the number whose logarithm is \( .2521 \) is about \( 1.7868 \). Then we get (approximately) \[ 2345\cdot 762 \approx 1.7868 \times 10^6 = 1{,}786{,}800.\]
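For anyone who wants to play with the procedure, here is a rough Python sketch of the same table-based multiplication. It assumes a four-place table with one row for each of 1.00, 1.01, ..., 9.99 (generated here with `math.log10` rather than typed in from the book) and accepts only positive integer inputs. It also uses linear interpolation in both directions. The names `table_log`, `table_antilog`, and `table_multiply` are hypothetical. Because it does not round the interpolated logarithms back to four places as I did by hand, its answer can differ slightly from the one above, but it lands within a few hundred of the exact product.

```python
import bisect
import math

# Assumed four-place table: one row per mantissa 1.00, 1.01, ..., 9.99,
# plus 10.00 as an end point. ROWS holds mantissa * 100; LOGS holds
# log10(mantissa) rounded to 4 decimal places, like a printed table.
ROWS = list(range(100, 1001))
LOGS = [round(math.log10(k / 100), 4) for k in ROWS]


def table_log(n):
    """Approximate log10(n) for a positive integer n from the table.

    Writes n = u * 10**r with 1 <= u < 10, finds the row for the first three
    significant digits of u, and interpolates linearly for any further digits.
    """
    digits = str(n)
    r = len(digits) - 1                       # exponent in scientific notation
    padded = digits.ljust(3, "0")
    i = int(padded[:3]) - 100                 # row for u truncated to 2 decimals
    rest = padded[3:]
    frac = int(rest) / 10 ** len(rest) if rest else 0.0  # position between rows
    return LOGS[i] + frac * (LOGS[i + 1] - LOGS[i]) + r


def table_antilog(y):
    """Read the table backwards: approximate 10**y, interpolating between rows."""
    r = math.floor(y)
    f = y - r                                 # fractional part, found in the table
    i = bisect.bisect_right(LOGS, f) - 1      # last row whose logarithm is <= f
    i = min(i, len(LOGS) - 2)                 # keep a row above i to interpolate
    u = (ROWS[i] + (f - LOGS[i]) / (LOGS[i + 1] - LOGS[i])) / 100
    return u * 10 ** r


def table_multiply(n, m):
    """Multiply by adding table logarithms, then reading the sum backwards."""
    return table_antilog(table_log(n) + table_log(m))


print(round(table_multiply(2345, 762)), 2345 * 762)
```

The printed pair compares the table-based estimate with Python's exact integer product.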

The exact answer is \( 1{,}786{,}890 \), so we missed by 90, a relative error of about 0.005%. Note also that I had to use linear interpolation to handle the digits that fall between the table's entries; this, together with rounding every entry to four decimal places, introduces small errors. The remedy for that is to have more accurate tables.
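For instance, here is the interpolation in the last step of the example. This assumes that the table's four-place entries for 1.78 and 1.79 are \( .2504 \) and \( .2529 \), which are the correctly rounded values. Since \( .2521 \) lies \( 17/25 \) of the way from the first entry to the second, \[ 10^{.2521} \approx 1.78 + 0.01\cdot\frac{.2521 - .2504}{.2529 - .2504} = 1.78 + 0.01 \cdot 0.68 = 1.7868. \]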

This was still in the Algebra II curriculum when I was in high school in the mid-80s. No longer. When I teach calculus, I try to explain to my students the historical motivations for logarithms, but I tend to get a bunch of blank stares. All they know about the (natural) logarithm function is that it is the inverse of the natural exponential function and that \[ \ln x = \int_1^x \frac{1}{t}\, dt.\]
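If you want to see that integral formula agree with the logarithm numerically, here is a small Python sketch. It uses composite Simpson's rule, which is my choice of quadrature rather than anything from the book. The function `ln_by_integral` and its default of 1000 subintervals are illustrative assumptions.

```python
import math


def ln_by_integral(x, n=1000):
    """Approximate ln(x) as the integral from 1 to x of dt/t (x > 0).

    Uses composite Simpson's rule with n subintervals (n must be even).
    For x < 1 the step h is negative, which correctly gives a negative result.
    """
    if n % 2:
        raise ValueError("n must be even")
    h = (x - 1) / n
    total = 1 + 1 / x                      # f(1) + f(x), where f(t) = 1/t
    for k in range(1, n):
        t = 1 + k * h
        total += (4 if k % 2 else 2) / t   # Simpson weights 4, 2, 4, ..., 4
    return total * h / 3


for x in (2, 10, 0.5):
    print(x, ln_by_integral(x), math.log(x))
```

For each \( x \), the two printed values should agree to many decimal places.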

They usually remember (after I remind them) that the log of a product is the sum of the logs, and that this can be somewhat useful for differentiating products of functions, but that's it.
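Here is that idea in its simplest form, assuming \( f \) and \( g \) are positive and differentiable so that the logarithms make sense. Start from \[ \ln\bigl(f(x)g(x)\bigr) = \ln f(x) + \ln g(x), \] and differentiate both sides to get \[ \frac{(fg)'(x)}{f(x)g(x)} = \frac{f'(x)}{f(x)} + \frac{g'(x)}{g(x)}, \quad\text{so}\quad (fg)'(x) = f'(x)g(x) + f(x)g'(x), \] which is the ordinary product rule.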

There's been a lot of talk lately about whether or not algebra is necessary. I mean, it's hard, and we don't want to make it a stumbling block, right? I won't make a counterargument here (but here's a good one). But I do know from years of experience teaching college students that their difficulties with calculus arise from their mediocre algebra skills, not the calculus itself. Perhaps it's time to return to a more solid algebra I - geometry - algebra II - trig/precalculus high school sequence, including all kinds of fun stuff like logarithms, to better prepare students for success later.

I'll conclude by pointing out that logarithms are not just an abstraction used to simplify calculations; they are built into the way our brains work. Our ears perceive sound on a roughly logarithmic scale, and the Weber-Fechner law quantifies this. Or, check out this, in which scientists from MIT determined that children, when asked what number is halfway between 1 and 9, usually answer 3, which is halfway logarithmically. This suggests that logarithmic scales are hardwired in us, so maybe we should understand them better.
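The arithmetic behind "halfway logarithmically" is that 3 is the geometric mean \( \sqrt{1 \cdot 9} \), so its logarithm (in any base) is the average of the logarithms of 1 and 9: \[ \tfrac{1}{2}(\log 1 + \log 9) = \tfrac{1}{2}(0 + 2\log 3) = \log 3, \] whereas the ordinary (arithmetic) midpoint is \( (1+9)/2 = 5 \).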